Blockchain API Service Quality: The Three Metrics That Matter

The NodeReal team carried out a thorough benchmarking test that shows NodeReal lives up to its reputation as the #1 API provider, with the fastest, most stable, and most scalable service on both RPC and Archive RPC.

How NodeReal Stacks Up Against Competitors

In the past, firms have often judged a blockchain service provider's quality by raw performance alone, such as how many requests the provider can handle.

In truth, it has never been easy to produce holistic evaluation statistics for a system that consists of hundreds of computers dispersed around the globe.

NodeReal works hard to deliver high-performance, predictable, and reliable services. By measuring holistic metrics, it is now possible to determine which blockchain infrastructure providers are the fastest and most dependable.

Our NodeReal team carried out a thorough benchmarking test that provides a precise indicator of our performance and how we compare to others.

Before we get into the results, let's look at the “what, why, and how” of the measurements we used.

The Metrics That Matter – Performance, Stability & Scalability Benchmarks

Performance reflects how responsive a service is, that is, how low its latency is.

The performance of a blockchain service is critical, especially if your dApp needs a responsive user experience. We measure performance as the response time of one or more typical API requests, with every service tested under the same load level, defined in QPS (queries per second).
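To make "the same load level" concrete, here is a minimal Python sketch of an open-loop load generator that sends requests at a fixed QPS and records each response time. It is an illustration under stated assumptions, not the harness used in the benchmark: `run_fixed_qps` and `timed_call` are hypothetical names, and the RPC call is replaced with a stub so the sketch runs offline.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def timed_call(fn: Callable[[], object]) -> float:
    """Run one request and return its response time in milliseconds."""
    start = time.perf_counter()
    fn()
    return (time.perf_counter() - start) * 1000.0


def run_fixed_qps(fn: Callable[[], object], qps: float, duration_s: float) -> List[float]:
    """Fire requests at a fixed rate (open loop) and collect latencies in ms.

    Requests are dispatched on a schedule of one every 1/qps seconds, so a slow
    response does not lower the offered load for the requests that follow.
    """
    total = int(qps * duration_s)
    interval = 1.0 / qps
    start = time.perf_counter()
    futures = []
    with ThreadPoolExecutor(max_workers=max(4, int(qps) * 2)) as pool:
        for i in range(total):
            # Sleep until the i-th slot on the schedule, then dispatch.
            delay = start + i * interval - time.perf_counter()
            if delay > 0:
                time.sleep(delay)
            futures.append(pool.submit(timed_call, fn))
    # Leaving the with-block waits for every in-flight request to finish.
    return [f.result() for f in futures]


if __name__ == "__main__":
    # Hypothetical stand-in for an RPC call that takes about 5 ms.
    fake_rpc = lambda: time.sleep(0.005)
    latencies = run_fixed_qps(fake_rpc, qps=10, duration_s=1.0)
    print(len(latencies), "requests")
```

Dispatching on a fixed schedule through a thread pool means a slow provider cannot quietly lower its own offered load, which keeps the comparison between providers fair.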

Stability reflects whether a service delivers that quality consistently, all the time.

Poor stability makes your dApp respond inconsistently to users' queries and creates a negative experience, whatever the user is trying to do. Evaluating an API service therefore means looking at stability as well as raw speed: response times should be stable and predictable at all times and from every region.

Scalability means the service stays stable and keeps its normal performance even at all-time-high traffic and during unusual spikes.

For example, at BNB Chain's all-time high, traffic exceeded 700,000 queries per second (QPS) and the chain had 2,000,000 daily active addresses. Blockchain infrastructure, and the dApps built on it, must be scalable to be widely used in the financial and nonfinancial sectors. In other words, the network must be designed to support a large number of transactions per second without sacrificing effectiveness or security. No matter how brilliant the innovation, a Web3 application with poor scalability limits its own potential, because many users cannot transact at the same time.

NodeReal Has Built the Infrastructure to Deliver Blistering Results Against These Critical Metrics

NodeReal is a one-stop shop for blockchain solutions and infrastructure, offering fast, dependable, and scalable infrastructure for high-performance Web3 applications. But how exactly do we do this?

Our flagship product is MegaNode. MegaNode provides fast, highly scalable, and stable blockchain API services (RPC/Archive), enabling developers to focus on building dApps with confidence.

Node Virtualization

1. Classical Node Cluster Architecture

If you are a developer, you have probably considered running your own node cluster to support your business. The most common way to run a node cluster for better performance and reliability is to put full-node instances behind a load balancer. This architecture is easy to implement and provides basic gains in performance and scalability.

A typical traffic flow looks like this:

- A client calls an API, and the traffic arrives at the load balancer.

- The load balancer forwards the request to one of the registered nodes, selected by a hashing algorithm computed over the following parameters:

  - The protocol

  - The source IP address and source port

  - The destination IP address and destination port

  - The TCP sequence number
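The parameter list above describes a flow hash. The Python sketch below, with a hypothetical `pick_node` function and SHA-256 standing in for whatever hash a real load balancer uses, shows the key consequence: every request on one connection maps to the same node.

```python
import hashlib
from typing import List, NamedTuple


class Flow(NamedTuple):
    """The fields a flow-hash load balancer looks at, per the list above."""
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    tcp_seq: int  # initial TCP sequence number of the connection


def pick_node(flow: Flow, nodes: List[str]) -> str:
    """Deterministically map one flow to one backend node.

    SHA-256 is an illustrative stand-in; real load balancers use their own hash.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c"]
    conn = Flow("TCP", "203.0.113.7", 51514, "198.51.100.10", 443, 123456789)
    # Every request on this kept-alive connection lands on the same node.
    print({pick_node(conn, nodes) for _ in range(1000)})
```

Because a long-lived connection from, say, an indexer always hashes to the same node, that one node's hardware caps the client's throughput.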

Looks good, right? In practice, there are several problems:

- For high volumes of requests from a single client, such as data indexing, only one node serves each request, so performance is capped by that node's hardware.

- For IO-intensive APIs, node performance falls short: a single machine's IOPS becomes the bottleneck for your RPC API.

- There is no caching mechanism to speed up queries.

- Scaling your node service is very difficult, if not impossible. Because a new node takes a long time to synchronize data, you cannot scale out quickly when requests surge.

- Data can be inconsistent when clients query the same API on different node instances, because each instance may be synced to a different block height.
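The last problem is easy to reproduce. In this hypothetical Python sketch, three nodes have synced to slightly different heights and a client's requests are spread round-robin across them (a simplification of flow hashing over separate connections). The node names and heights are made up for illustration.

```python
import itertools
from typing import Dict, List

# Hypothetical cluster: each node has synced to a slightly different height.
NODE_HEIGHTS: Dict[str, int] = {
    "node-a": 1_000_105,
    "node-b": 1_000_103,  # lagging two blocks behind
    "node-c": 1_000_105,
}


def observed_latest_blocks(requests: int) -> List[int]:
    """Send `requests` 'latest block' queries round-robin across the nodes."""
    rotation = itertools.cycle(NODE_HEIGHTS)  # cycles over node names in order
    return [NODE_HEIGHTS[next(rotation)] for _ in range(requests)]


def went_backwards(heights: List[int]) -> bool:
    """True if any answer reports a lower block than the answer before it."""
    return any(later < earlier for earlier, later in zip(heights, heights[1:]))


if __name__ == "__main__":
    seen = observed_latest_blocks(4)
    print(seen, "went backwards:", went_backwards(seen))
```

Here the second answer reports a block two lower than the first, so from the client's point of view the chain appears to move backwards.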

2. The MegaNode Architecture

Instead of simply putting blockchain nodes behind a load balancer, we redesigned the architecture to make our node service the fastest, most reliable, and most scalable platform on the market.

We break the monolithic node into multiple microservices, each serving a single role, which makes our service scalable and reliable. In front of these services sits a coordinator that handles ingress traffic and calls the supporting services to return the requested data to the client.

A typical flow looks like this:

- An app requests transaction data by sending a request to our load balancer, which forwards it to our coordinator service.

- The coordinator forwards the request to the corresponding service, which queries the data and returns it to the coordinator.

- If any service crosses a compute-resource usage threshold, a new instance of that service is scaled out automatically.
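The flow above can be sketched as a coordinator that routes each JSON-RPC method to the single-role service that owns it and scales out any service over a utilization threshold. This is a toy model, not MegaNode's implementation: the `Service` and `Coordinator` classes, the 0.7 threshold, and the add-one-instance rule are all assumptions. The -32601 code is the standard JSON-RPC 2.0 "Method not found" error.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass(eq=False)  # identity equality, so instances stay hashable
class Service:
    """A hypothetical single-role microservice pool, e.g. one serving logs."""
    name: str
    handler: Callable[[dict], Any]
    instances: int = 1
    cpu_utilization: float = 0.0  # average across instances, 0.0 to 1.0


@dataclass
class Coordinator:
    """Routes each JSON-RPC method to the service that owns it."""
    routes: Dict[str, Service] = field(default_factory=dict)
    scale_out_threshold: float = 0.7  # assumed value, for illustration only

    def register(self, method: str, service: Service) -> None:
        self.routes[method] = service

    def handle(self, request: dict) -> dict:
        service = self.routes.get(request.get("method", ""))
        if service is None:
            # JSON-RPC 2.0 "Method not found" error.
            return {"jsonrpc": "2.0", "id": request.get("id"),
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "result": service.handler(request)}

    def autoscale(self) -> None:
        """Add one instance to every service at or above the threshold."""
        # A set deduplicates services registered under several methods.
        for service in set(self.routes.values()):
            if service.cpu_utilization >= self.scale_out_threshold:
                service.instances += 1
```

Because the coordinator scales each service independently, an IO-heavy log service can grow without adding capacity to services that are idle.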

Benefits of Node Virtualization

- Our database scales out for better performance, so single-node hardware is no longer the bottleneck.

- We also implement the CQRS (Command Query Responsibility Segregation) pattern, which separates the write path from the read path so data retrieval can be optimized on its own.

- Virtualization also makes caching possible: we cache hot data to boost performance.
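Caching hot data usually means a read-through cache in front of the slower query path. The Python sketch below is a small LRU cache with a time-to-live; the `ReadThroughCache` name, capacity, and TTL are hypothetical, and nothing in it is specific to MegaNode's cache.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Tuple


class ReadThroughCache:
    """Small LRU cache with a TTL, placed in front of a slower read path."""

    def __init__(self, loader: Callable[[Hashable], Any],
                 max_items: int = 1024, ttl_s: float = 3.0):
        self._loader = loader          # called on a miss, e.g. a database query
        self._max_items = max_items
        self._ttl_s = ttl_s
        self._entries: "OrderedDict[Hashable, Tuple[float, Any]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl_s:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = self._loader(key)           # fall through to the backing store
        self._entries[key] = (now, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self._max_items:
            self._entries.popitem(last=False)  # evict least recently used
        return value
```

A short TTL suits data that changes every block, such as the latest block number; results for older block ranges that are already final could be cached far longer.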

What Happened When We Put This to The Test?

At NodeReal, we challenge ourselves every day to provide the best possible node infrastructure for our users.

To do this, we use a comprehensive testing framework that evaluates not only the performance but also the stability and scalability of the network.

In our testing, we queried the node services from three locations and increased the load from 1 QPS to 10 QPS to test stability and scalability as well as performance. Because eth_getLogs is an IO-intensive API, we chose it to see how performance and stability hold up under heavy IO pressure.

Two metrics are worth noting:

- Average latency

- TP90 latency: the latency at or below which 90% of all requests complete.
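Both metrics can be computed from a list of measured response times. This Python sketch uses the nearest-rank definition of the 90th percentile; the article does not say which percentile method the benchmark used, so treat that choice, and the `tp90` and `summarize` names, as assumptions.

```python
from statistics import mean
from typing import Dict, List


def tp90(latencies_ms: List[float]) -> float:
    """Nearest-rank 90th percentile: 90% of requests were at or below this value."""
    if not latencies_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(latencies_ms)
    n = len(ordered)
    rank = (9 * n + 9) // 10  # ceil(0.9 * n) in integer arithmetic, 1-based
    return ordered[rank - 1]


def summarize(latencies_ms: List[float]) -> Dict[str, float]:
    """Average and TP90 latency for one provider, region, and QPS level."""
    return {"avg_ms": mean(latencies_ms), "tp90_ms": tp90(latencies_ms)}
```

For example, for the ten samples 10, 20, ..., 90 and 1,000 ms, `summarize` returns an average of 145 ms but a TP90 of 90 ms: a single slow outlier moves the average much more than the percentile, which is why the two are reported together.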

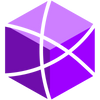

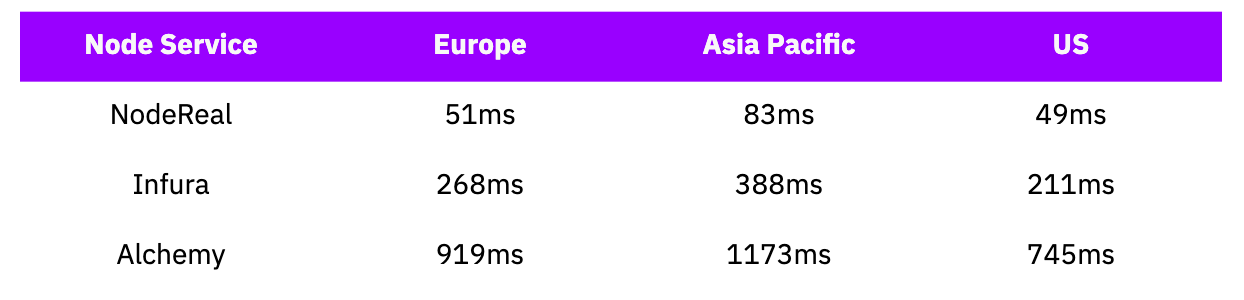

Test A – Query eth_getLogs at 1 QPS

In this test:

- eth_getLogs is an IO-intensive request; we compared NodeReal, Infura, and Alchemy.

- The block range runs from latest - 100 to latest.

- The filter matches USDT transfers within that range.

- The test duration is 180 seconds.

- In all three regions, NodeReal was the fastest node service, ahead of Infura and Alchemy.
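For reference, the Test A request can be expressed as a JSON-RPC body like the one built below. Two assumptions apply: that the test targeted Ethereum mainnet (the article does not name the chain), so the address is the Ethereum mainnet USDT contract; and that "USDT transfer" means the standard ERC-20 `Transfer` event. Note that `latest - 100` has to be resolved to a number first, for example from an `eth_blockNumber` call, because the block parameters of `eth_getLogs` take a hex quantity or a tag such as `"latest"`, not arithmetic. The helper names are hypothetical.

```python
import json
from typing import Any, Dict

# Assumption: Ethereum mainnet USDT (Tether) contract address.
USDT_CONTRACT = "0xdAC17F958D2ee523a2206206994597C13D831ec7"
# Topic 0 of the ERC-20 event Transfer(address,address,uint256).
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


def parse_quantity(hex_quantity: str) -> int:
    """Decode a JSON-RPC hex quantity, such as the result of eth_blockNumber."""
    return int(hex_quantity, 16)


def get_logs_request(latest_block: int, span: int = 100, request_id: int = 1) -> Dict[str, Any]:
    """Build an eth_getLogs body covering blocks latest - span through latest."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getLogs",
        "params": [{
            "fromBlock": hex(latest_block - span),  # hex quantity, no leading zeros
            "toBlock": hex(latest_block),
            "address": USDT_CONTRACT,
            "topics": [TRANSFER_TOPIC],
        }],
    }


if __name__ == "__main__":
    latest = parse_quantity("0x3e8")  # stand-in for a real eth_blockNumber result
    print(json.dumps(get_logs_request(latest), indent=2))
```

The same body, sent at 10 QPS instead of 1 QPS, covers Test B.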

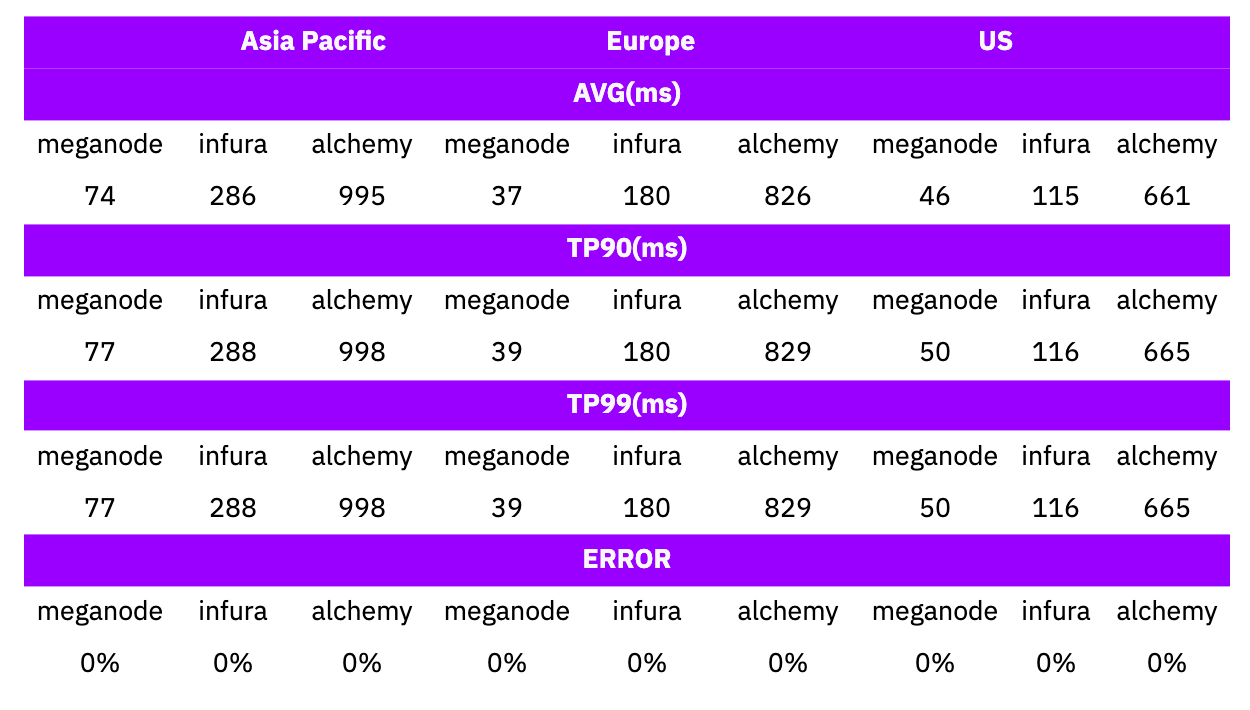

Test B – Query eth_getLogs at 10 QPS

In this test:

- eth_getLogs is an IO-intensive request; we compared NodeReal, Infura, and Alchemy.

- The block range runs from latest - 100 to latest.

- The filter matches USDT transfers within that range.

- The test duration is 180 seconds.

- In all three regions, NodeReal was the fastest node service, ahead of Infura and Alchemy.

Because of the request volume, Alchemy hit its rate limit and returned error code 400 Bad Request.

Conclusion

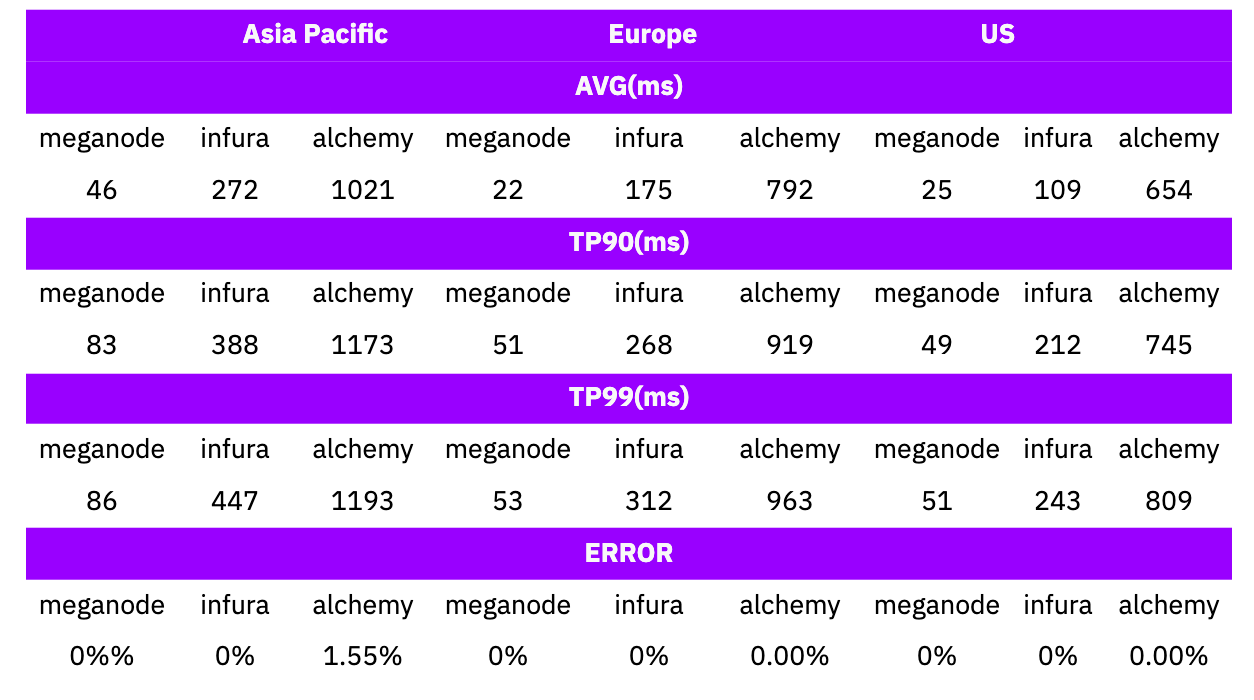

Because eth_getLogs requires heavy IO, at 1 QPS Infura's response times ranged from 100 ms to 288 ms and Alchemy's from 660 ms to 998 ms, while NodeReal's ranged from 37 ms to 74 ms, much lower than both.

The TP90 latency was also measured at 1 QPS.

When we increased the load to 10 QPS, the TP90 response times were as shown below.

First, on performance: of the three node services, NodeReal was the fastest in all three of the busiest regions at 1 QPS.

Then let's talk about stability.

When we increased the load from 1 QPS to 10 QPS, service quality was as shown below.

Based on the results above, NodeReal's MegaNode is clearly leading the pack – living up to its reputation as the #1 API provider, with the fastest, most stable, and most scalable service on both RPC and Archive RPC. For real-time performance data, you can always check our status page.

About NodeReal

NodeReal is a one-stop infrastructure and solution provider that embraces the high-speed blockchain era. We provide scalable, reliable, and efficient blockchain solutions for everyone, aiming to support the adoption, growth, and long-term success of the Web3 ecosystem.

Join Our Community

Join our community to learn more about NodeReal and stay up to date!